Research Group Quantifying Security

Quantifying security is one of the fundamental open problems in IT security research. In contrast to safety, it is not possible to take an overall statistical approach, as the adversary in IT security is intelligent, adaptive, and learns from the past. In the context of IT security, qualitative security analyses already allow us to deal with such intelligent adversaries. In the Research Group “Quantifying Security” (Q), we extend the state of the art from qualitative to quantitative analyses. To this end, we first investigate possible quantitative indicators for security coming from the disciplines participating in this Research Group, i.e., cryptography, IT security, formal methods, and economics. Examples of quantitative indicators include the coverage of formal verification or a partial order for security notions, e.g., in the setting of anonymous communication.

Quantitative indicators are of particular interest if analyses cannot be fully performed or completed, e.g., due to a system’s complexity or because of the existence of vulnerabilities. In such settings, the analysis could indicate the severity of the vulnerability or help to prove the correctness of a fix, i.e., that no new vulnerabilities are introduced. In order to obtain a holistic quantification of a system’s security, the individual quantitative analyses have to be combined in an appropriate way. A particular challenge is to map the disciplinary quantitative indicators to a common indicator such as the risk or the cost of an adversary to attack the system. In the Research Group Q, we develop such common indicators and a methodology for the combination of the individual analyses. We work together with the other Research Groups and Security Labs for applications of our methods, e.g., to perform a quantitative security analysis of a demonstrator.

Research Area 1 – Cryptographic Aspects of Quantification

Involved PIs: Jörn Müller-Quade, Thorsten Strufe

Active Researchers: Laurin Benz, Robin Berger, Christoph Coijanovic, Yufan Jiang, Christian Martin, Jeremias Mechler, Marcel Tiepelt

The security of a system can be quantified using different artifacts, such as its code, its architecture, or its protocol description. In the research area “Cryptographic Aspects of Quantification”, we are mainly concerned with quantifying the security of protocols by considering the different security and privacy notions they achieve. We are also interested in the properties of basic building blocks such as cryptographic assumptions.

In contrast to properties like safety, which exhibit statistical behavior that can be observed or tested, security and privacy must deal with so-called intelligent adversaries. Such adversaries will carry out any attack available to them with probability 1, rather than at some observable rate. As a consequence, an empirical approach is insufficient. Fortunately, there exist very good mathematical models in which security and privacy can be proven, even in the presence of intelligent adversaries.

Privacy

Within the research area of anonymous communication, a wide variety of protocols has been proposed. These protocols differ in the privacy goals they target and achieve, which makes it difficult to find a suitable protocol for a given use case. We have proposed a unified framework of privacy notions, which formalises common privacy goals in anonymous communication and places them in a hierarchy. Because this hierarchy makes the relationship between any two privacy notions explicit, the privacy of different protocols can now be compared.
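The following Python sketch illustrates how a hierarchy makes notions comparable. It encodes a few "implies" edges between notions as a directed graph and classifies two notions as stronger, weaker, equivalent, or incomparable. The notion names and edges are hypothetical placeholders and are not the actual notions or relations of the framework.

```python
# Illustrative only: hypothetical notion names and implication edges,
# not the actual hierarchy of the privacy-notion framework.
IMPLIES = {
    ("sender_unobservability", "sender_anonymity"),
    ("sender_anonymity", "sender_message_unlinkability"),
    ("receiver_unobservability", "receiver_anonymity"),
}


def implies(a: str, b: str) -> bool:
    """True if notion a implies notion b (reflexive-transitive closure of IMPLIES)."""
    seen, stack = {a}, [a]
    while stack:
        current = stack.pop()
        if current == b:
            return True
        for stronger, weaker in IMPLIES:
            if stronger == current and weaker not in seen:
                seen.add(weaker)
                stack.append(weaker)
    return False


def compare(a: str, b: str) -> str:
    """Classify how two notions (e.g. achieved by two protocols) relate."""
    a_to_b, b_to_a = implies(a, b), implies(b, a)
    if a_to_b and b_to_a:
        return "equivalent"
    if a_to_b:
        return "stronger"
    if b_to_a:
        return "weaker"
    return "incomparable"


print(compare("sender_unobservability", "sender_message_unlinkability"))  # stronger
print(compare("sender_anonymity", "receiver_anonymity"))  # incomparable
```

The "incomparable" case shows why the result is a partial order rather than a ranking. Two protocols can only be ordered if the notions they achieve are related in the hierarchy.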

For onion routing, one of the main primitives for anonymous communication, we showed that the state of the art in privacy formalisation is flawed, allowing attacks that break anonymity. We proposed a new set of formalised privacy properties that match the ideal functionality of onion routing.

Applying Quantifiable Privacy

We also proposed several novel anonymous communication systems for different use cases. For each system, we focused on provable privacy and comparison of privacy protection with the state of the art.

With Panini, we proposed the first anonymous communication system that implements the anycast communication pattern. We used an adapted version of Kuhn et al.'s privacy notions to quantify its privacy guarantees. With Pirates, we proposed a system that allows anonymous voice calls within groups of participants. Again, we used Kuhn et al.'s privacy notions to prove that Pirates has stronger privacy protection than related work, without requiring any trust in the infrastructure.

With PolySphix, we proposed a packet format for onion routing that is capable of efficient multicast communication. We adapted Kuhn et al.'s onion properties to show that this multicast capability does not reduce privacy compared to the state of the art in onion routing.

Privacy for Trajectory Data

The analysis of trajectory data has become a popular field of study thanks to its numerous applications, such as navigation, route recommendations, and traffic jam prediction. However, trajectory data is highly sensitive, as it reveals where people have been, at what time, and whom they have met, so it must be protected.

In the field of trajectory protection, we have developed comprehensive systematizations of knowledge covering protection with differential privacy, protection under syntactic privacy notions, and differential privacy in the context of machine learning. We focused on differential privacy as the state-of-the-art privacy concept, covering various privacy and utility measures and providing a taxonomy of the existing proposals. Our research showed that the majority of proposals fail to achieve the level of differential privacy they claim.
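Many of the surveyed proposals build on basic noise-adding mechanisms. As a point of reference, here is a minimal Python sketch of the textbook Laplace mechanism applied to a hypothetical count query over trajectories. This is not one of the surveyed proposals. The example assumes that each person contributes at most one trajectory, so the query's sensitivity is 1. If a person can contribute several trajectories, the sensitivity, and therefore the noise, must grow accordingly.

```python
import random
from typing import Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw from Laplace(0, scale): the difference of two i.i.d. exponentials
    with rate 1/scale is Laplace-distributed with that scale."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def noisy_count(true_count: int, epsilon: float, sensitivity: float = 1.0,
                rng: Optional[random.Random] = None) -> float:
    """Laplace mechanism: adding Laplace(sensitivity / epsilon) noise to a query
    with the given L1 sensitivity yields epsilon-differential privacy."""
    rng = rng if rng is not None else random.Random()
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical query: how many trajectories pass through one map cell.
# Assumes each person contributes at most one trajectory (sensitivity 1).
rng = random.Random(42)
print(noisy_count(130, epsilon=0.5, rng=rng))
```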

We also examined the limitations and challenges of differential privacy and observed that potential solutions can be defined for general data structures. In this regard, we conducted a study of composition in differential privacy, a pivotal property that distinguishes this concept from other notions in the literature, and we provided generalizations of the well-known composition theorems. These new theorems enable us to establish more precise bounds on the privacy guarantees and to measure the privacy bound in variable settings, which was previously not possible.
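For context, the two classical composition theorems that such generalizations start from can be compared numerically. The Python sketch below implements basic composition and the advanced composition theorem of Dwork, Rothblum, and Vadhan. It does not implement the generalized theorems described above. For 100 releases at ε = 0.1, the advanced bound gives roughly ε ≈ 5.85 (with δ' = 10⁻⁵) instead of 10.

```python
import math
from typing import Tuple


def basic_composition(eps: float, delta: float, k: int) -> Tuple[float, float]:
    """Basic composition: k mechanisms, each (eps, delta)-DP, are (k*eps, k*delta)-DP."""
    return k * eps, k * delta


def advanced_composition(eps: float, delta: float, k: int,
                         delta_prime: float) -> Tuple[float, float]:
    """Advanced composition (Dwork, Rothblum, Vadhan): for any delta_prime > 0 the
    k-fold composition is (eps_total, k*delta + delta_prime)-DP."""
    eps_total = (math.sqrt(2 * k * math.log(1 / delta_prime)) * eps
                 + k * eps * (math.exp(eps) - 1))
    return eps_total, k * delta + delta_prime


# 100 releases, each 0.1-DP (delta = 0):
print(basic_composition(0.1, 0.0, 100))           # epsilon = 10
print(advanced_composition(0.1, 0.0, 100, 1e-5))  # epsilon about 5.85, delta 1e-5
```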

Cryptography

In order to prove the security of cryptographic building blocks, a mathematical definition of security is needed. At first glance, such security notions often seem to give only a yes-or-no answer with respect to security, which makes them appear inapplicable for quantification. On closer inspection, however, security notions can be used to quantify security by considering relationships between the notions themselves (in the sense of partial orders) or by considering the allowed adversarial influence. These aspects have been researched intensively. Moreover, the cryptographic building blocks that protocols use to achieve these security and privacy notions can be quantified with respect to an adversary’s cost to break them.
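One common way to express "an adversary's cost to break" a building block is the bit-security heuristic: log₂ of the attacker's running time divided by its success probability. The Python sketch below applies this heuristic to exhaustive key search. It is a simplified convention chosen for illustration. More refined definitions of bit security exist, and the function names are hypothetical.

```python
import math


def bit_security(time: float, advantage: float) -> float:
    """Heuristic security level in bits: log2 of running time over success probability."""
    return math.log2(time / advantage)


def brute_force_bits(key_bits: int, guesses: float) -> float:
    """Exhaustive key search over 2**key_bits keys: trying `guesses` keys succeeds
    with probability guesses / 2**key_bits."""
    advantage = guesses / 2 ** key_bits
    return bit_security(guesses, advantage)


# Whatever the attacker's budget, the ratio stays at 2**128 for a 128-bit key.
print(brute_force_bits(128, 2 ** 40))  # 128.0
print(brute_force_bits(128, 2 ** 80))  # 128.0
```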

One core focus of our research is composable security notions. To this end, we considered variants of the established Universal Composability (UC) notion due to Canetti.

We proposed a security notion that prevents the negative consequences of initially honest parties being corrupted through attacks over the network. For such attacks, the achieved security is strictly better than the established state of the art. To achieve this strong security, simple trusted hardware modules are used. The resulting architectures are particularly amenable to quantifying an adversary's cost to break the security.

A different research avenue in the field of composable security notions aims to reduce trust assumptions to those that are provably necessary. We proposed a composable security notion that requires no central trust at all. In contrast to all previous notions for composable security in the plain model, our approach requires certain assumptions to hold only temporarily. This increases the cost for an adversary, who must break these assumptions within a very short time in order to break security.

A second research focus is quantifying the cost of attacking cryptographic systems using quantum computers. This is motivated by the ongoing development of quantum technologies and the emerging threat of store-now-decrypt-later attacks. In such attacks, adversaries intercept and store encrypted communication today, with the goal of decrypting it in the future once quantum capabilities are sufficiently advanced. Since the development and standardization of quantum-secure protocols can take years, it is crucial to begin preparing now.
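The argument for preparing now is often stated as Mosca's inequality: if the time data must remain confidential plus the time needed to migrate exceeds the time until a cryptographically relevant quantum computer exists, then data encrypted today is at risk. The Python sketch below encodes this rule of thumb. The function name and all figures are hypothetical.

```python
def quantum_migration_risk(shelf_life_years: float, migration_years: float,
                           years_until_quantum: float) -> bool:
    """Mosca's inequality: data encrypted today is exposed to store-now-decrypt-later
    attacks if the time it must stay confidential (shelf life) plus the time needed
    to migrate to quantum-secure protocols exceeds the time until a cryptographically
    relevant quantum computer exists."""
    return shelf_life_years + migration_years > years_until_quantum


# Hypothetical figures: records that must stay secret for 10 years, a 5-year
# migration, and a quantum computer assumed to arrive in 12 years.
print(quantum_migration_risk(10, 5, 12))  # True
```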

We approach this threat from two perspectives. First, we study how quantum algorithms challenge computational assumptions in cryptography. The primary objectives of these studies are to (A) develop novel attacks that highlight vulnerabilities, and (B) estimate the concrete computational cost incurred by quantum adversaries.
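As a much simpler instance of objective (B), the Python sketch below applies the textbook estimate for Grover's algorithm to exhaustive search. Finding one marked item among N candidates takes about (π/4)·√N iterations, so a 128-bit search space costs roughly 2^63.65 iterations. This example is not how costs for lattice assumptions are estimated. It also ignores error-correction overhead and the fact that Grover's algorithm parallelizes poorly.

```python
import math


def grover_iterations(search_bits: int) -> float:
    """Approximate optimal number of Grover iterations, (pi/4) * sqrt(N),
    to find a single marked item in a search space of N = 2**search_bits."""
    return (math.pi / 4) * math.sqrt(2.0 ** search_bits)


for bits in (128, 256):
    # log2 of the iteration count, i.e. the quantum "bit cost" of exhaustive search
    print(bits, round(math.log2(grover_iterations(bits)), 2))
```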

Second, we review the impact of quantum attacks on real-world cryptographic protocols, focusing on implications of transitioning from classically secure to quantum-secure primitives. Our objectives are to (C) quantify security of existing protocols under quantum threats and (D) improve existing constructions to offer provably stronger security guarantees than the current approach.

Challenging Computational Assumptions

Important ongoing efforts in the development of quantum-secure standards are the Post-Quantum Cryptography and Signature competitions led by the National Institute of Standards and Technology (NIST). These processes are also acknowledged by the German Federal Office for Information Security (BSI). Within these competitions, our research focuses on evaluating the security of both cryptographic protocols and their implementations. For instance, we developed novel side-channel attacks exploiting decryption failures in early NIST competition candidates, demonstrating vulnerabilities in the Mersenne-based candidate Ramstake.

Conversely, our work has also contributed to establishing confidence in promising schemes. By quantifying the cost of quantum attacks on lattice-based computational assumptions, our work suggests that the lattice-based schemes ML-KEM/Kyber and ML-DSA/Dilithium, which were standardized as Federal Information Processing Standards (FIPS 203 and FIPS 204) in August 2024, provide security against quantum attacks. Notably, Kyber is already seeing widespread adoption, including integration into mainstream applications such as the Chrome browser.

Impact on Real-World Protocols

One application we consider is Password Authenticated Key Exchange (PAKE) protocols, which enable two parties to establish a high-entropy cryptographic key from a shared low-entropy secret. These protocols are deployed in practice. For instance, they are used in European passports to securely access personal data and biometrics, and they form the foundation of WPA3, the de-facto standard for password-based access to WiFi networks globally. In our work, we develop formal methods to quantify the security of PAKE protocols in the presence of quantum adversaries. We also propose protocol extensions that upgrade classically secure PAKE protocols to provide some provable security even in the quantum setting.
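Classically, PAKE security is typically quantified so that an attacker can only guess online. With a password drawn uniformly from a dictionary of size |D|, an attacker making q active attempts succeeds with probability about q/|D|, plus terms that are negligible under the cryptographic assumptions. The Python sketch below computes only this idealized main term. The function name is hypothetical. Against quantum adversaries, the omitted assumption-dependent terms are exactly what may no longer be negligible.

```python
def pake_online_guessing_bound(online_attempts: int, dictionary_size: int) -> float:
    """Idealised PAKE guarantee: with a password drawn uniformly from a dictionary
    of `dictionary_size` entries, an attacker making `online_attempts` active
    guesses succeeds with probability at most about online_attempts / dictionary_size
    (negligible cryptographic terms omitted)."""
    return min(1.0, online_attempts / dictionary_size)


# Hypothetical: a 6-digit PIN (10**6 candidates) and 10 online attempts.
print(pake_online_guessing_bound(10, 10 ** 6))  # 1e-05
```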

Another line of research was carried out in collaboration with the German Aerospace Center (DLR), focusing on the security architecture of LDACS, Europe’s next-generation civil aviation communication system. LDACS will be responsible for establishing a secure link between ground stations and civil aircraft, supporting functionalities such as automated positioning and navigation. Together with researchers at DLR, we designed a new protocol that balances quantum security with the efficiency requirements of radio communication in civil aviation. Additionally, our security analysis of the LDACS protocol shows that it achieves mutually authenticated key exchange that is secure even against quantum attackers.

Cryptographic Security Notions

On the theoretical side, we proposed a variant of Universal Composability that achieves so-called long-term security. Informally, a protocol is long-term secure if an adversary must break the computational assumptions while the protocol is still running. Breaking them afterwards does not compromise security, which prevents store-now-decrypt-later attacks. However, long-term security previously required very strong assumptions. In our research, we considerably weakened these assumptions.

To quantify the security of real-world systems, we are developing an approach that transforms cryptographic security proofs into attack trees. The approach captures the assumptions of the model systematically, so that they can be mapped to security mechanisms of the real system. The resulting attack tree can then be further refined and used to quantify, e.g., the costs of an adversary. The attack tree can also interface with other quantitative security analyses covering different parts of the system, e.g., its code. This research is applied in the Research Area “Security Analyses”.
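Once such an attack tree exists, a standard way to quantify it is a bottom-up evaluation of the adversary's minimum cost. An OR node takes the cheapest child, and an AND node sums the costs of all its children. The Python sketch below shows this textbook evaluation on a hypothetical tree with made-up costs. It is not the transformation from security proofs itself.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """Attack-tree node: a leaf with a cost, or an AND/OR gate over children."""
    name: str
    gate: str = "LEAF"   # "LEAF", "AND" or "OR"
    cost: float = 0.0    # only used for leaves
    children: List["Node"] = field(default_factory=list)


def min_attack_cost(node: Node) -> float:
    """Cheapest way to reach the node's goal: OR takes the cheapest child,
    AND needs all children, so their costs add up."""
    if node.gate == "LEAF":
        return node.cost
    costs = [min_attack_cost(child) for child in node.children]
    if node.gate == "OR":
        return min(costs)
    if node.gate == "AND":
        return sum(costs)
    raise ValueError(f"unknown gate {node.gate!r}")


# Hypothetical tree: break confidentiality either by breaking the computational
# assumption, or by extracting a key from a hardware module AND bypassing logging.
tree = Node("break confidentiality", "OR", children=[
    Node("break computational assumption", cost=1e9),
    Node("key extraction path", "AND", children=[
        Node("extract key from hardware module", cost=50_000),
        Node("bypass audit logging", cost=10_000),
    ]),
])
print(min_attack_cost(tree))  # 60000
```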

Research Area 2 – Quantifying Architecture and Code (QuAC)

Involved PIs: Bernhard Beckert (Head), Ralf Reussner

Active Researchers: Florian Lanzinger, Frederik Reiche, Samuel Teuber

Most formal methods treat the correctness of a software system as a binary property. However, proving the correctness of complex systems completely is difficult, because they involve multiple components, usage scenarios, and environments.

In the QuAC project, we formalized and implemented a modular approach for quantifying the safety of service-oriented software systems by combining software architecture modeling with deductive source-code verification. We first formally analyze the source code to find weaknesses, i.e., inputs for which the software may behave incorrectly. We then combine these weaknesses with the model of the service-oriented architecture as well as the probabilistic usage scenarios of the system. This combined model can then be analyzed, leveraging our previous research on quantification, to compute the probability that the software will behave correctly.
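A much-simplified picture of the final step follows. Suppose the usage profile assigns a probability to each scenario, and each service called in a scenario hits an input covered by a known weakness with some probability. The probability of correct behavior is then a weighted sum of products. The Python sketch below assumes independent failures and one call per service per scenario. The services, scenarios, and numbers are hypothetical, and the actual QuAC analysis of the architecture model is richer than this.

```python
from math import prod
from typing import Dict, List, Tuple


def probability_correct(usage: Dict[str, Tuple[float, List[str]]],
                        weakness_prob: Dict[str, float]) -> float:
    """P(correct) = sum over usage scenarios of
    P(scenario) * product over the services it calls of (1 - P(weakness hit)).
    Assumes independent failures and one call per service and scenario."""
    return sum(p_scenario * prod(1 - weakness_prob[s] for s in services)
               for p_scenario, services in usage.values())


# Hypothetical usage profile and per-service probabilities of hitting an input
# for which verification found a weakness.
usage = {
    "browse": (0.7, ["frontend", "catalog"]),
    "checkout": (0.3, ["frontend", "payment"]),
}
weakness_prob = {"frontend": 0.01, "catalog": 0.0, "payment": 0.05}
print(round(probability_correct(usage, weakness_prob), 5))  # 0.97515
```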

To quantify the security of software, we extended QuAC with attack models. We model the capabilities and possible actions of an attacker based on the weaknesses found by our deductive verification. This security analysis has been applied in the Security Lab Energy, where we analyzed various security requirements using a model of EVerest electric-vehicle charging stations. It has also been applied within this Research Group, where we analyzed manipulation attacks on the software powering the CoRReCt demonstrator.

Research Area 3 – Economic Cyber Risk Analysis

Involved PIs: Jürgen Beyerer, Marcus Wiens (Head)

Active Researchers: Ingmar Bergmann, Jürgen Beyerer, Pascal Birnstill, Jeremias Mechler, Ankush Meshram, Jörn Müller-Quade, Jonas Vogl, Paul Georg Wagner, Marcus Wiens

Economic Cost Assessment & Optimal Allocation of Defense Resources

Investing in cyber security should be both effective and efficient. This presents companies with non-trivial problems for several reasons.

The “Mapping-Problem”: Many types of risks (e.g., data breach) cannot be directly assigned to specific business processes, which is a prerequisite for impact valuation. Our approach is to apply Process-Value-Analysis (PVA) as an established method to determine the value of disrupted processes, either on an industry-level or a supply-chain-level (Kaiser et al. 2021a).

The “Prioritization-Problem”: It is difficult to prioritize system elements and the business processes they may affect, mainly because of the systems’ interconnectedness.

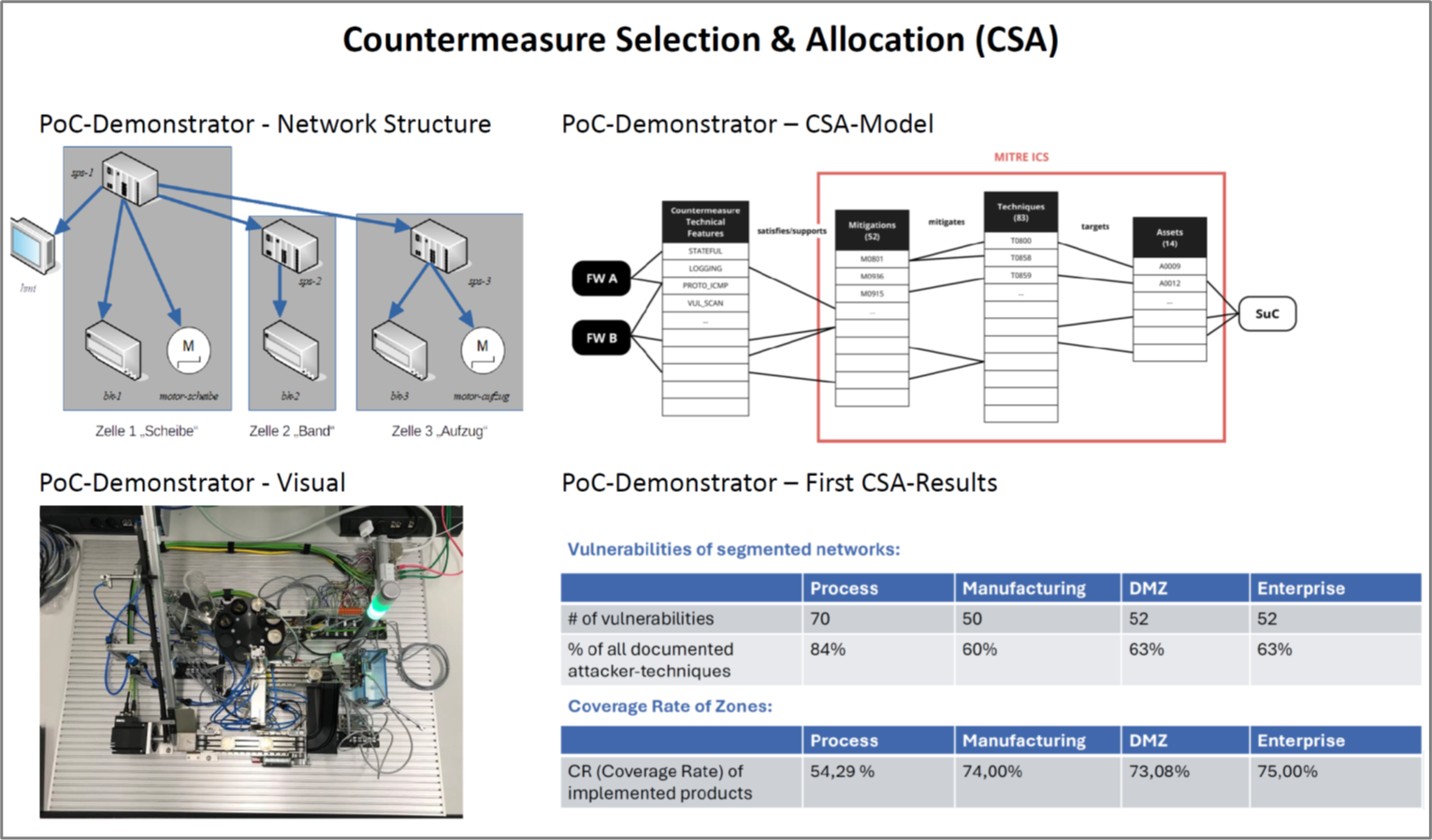

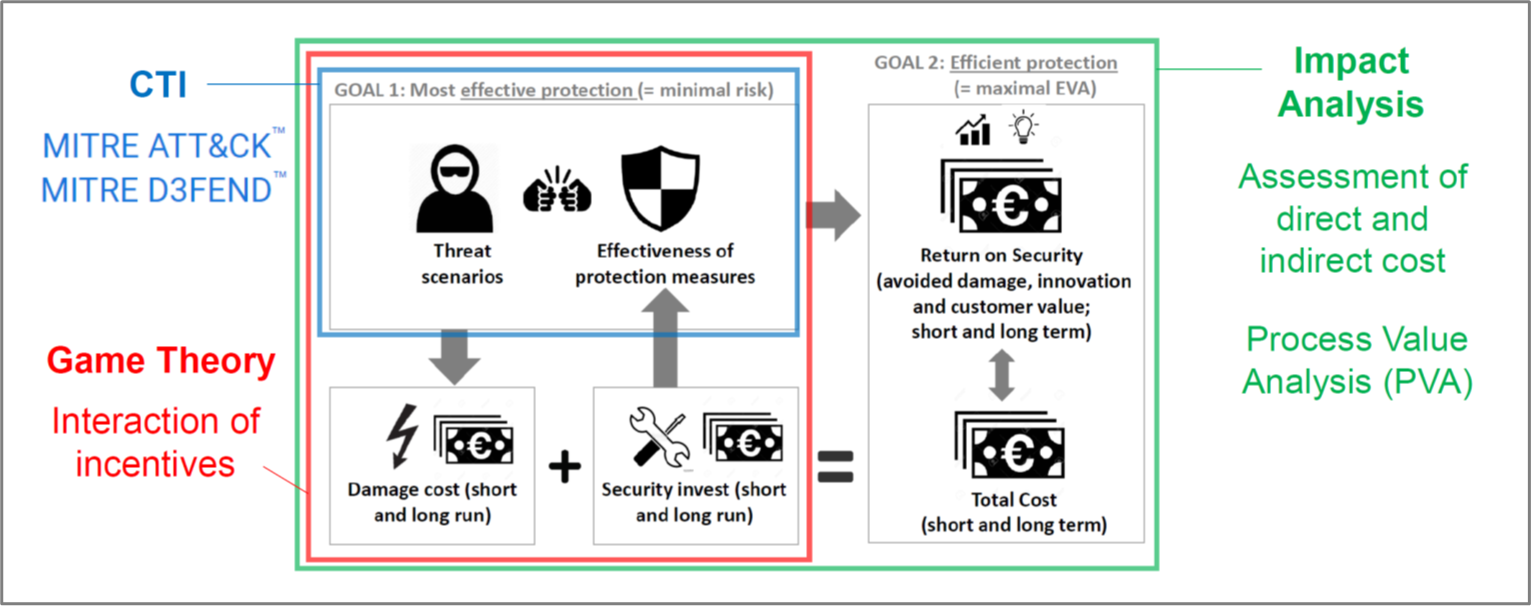

The “Scalability-Problem”: Even once it is clear which defensive measures to prioritize, these measures often need to be scalable in practice. Scalability gives companies additional flexibility. On the market for security solutions, “off-the-shelf” products are not necessarily suitable for all companies and must be tailored to specific needs; for SMEs, this tailoring makes some security solutions affordable in the first place. We approach the Prioritization-Problem and the Scalability-Problem by combining game-theoretic analysis, Cyber Threat Intelligence (CTI, Kaiser et al. 2023), and an approach for Countermeasure Selection & Allocation (CSA). The CSA approach was developed for Industrial Control Systems (ICS), with the KASTEL-Production Lab PoC-demonstrator of Fraunhofer IOSB as a use case.
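To make the allocation question concrete, one generic formulation treats it as a 0/1 knapsack problem: choose countermeasures that maximize total risk reduction without exceeding a budget. The Python sketch below solves this by dynamic programming. It is not the CSA approach described above. It also assumes additive, independent risk reductions and integer costs, and the countermeasure names and figures are hypothetical.

```python
from typing import List, Tuple


def select_countermeasures(options: List[Tuple[str, int, float]],
                           budget: int) -> Tuple[float, List[str]]:
    """0/1 knapsack: pick countermeasures (name, cost, risk_reduction) maximising
    total risk reduction without exceeding the budget (integer cost units)."""
    # best[b] = (reduction, chosen names) achievable with a budget of at most b
    best: List[Tuple[float, List[str]]] = [(0.0, [])] * (budget + 1)
    for name, cost, reduction in options:
        # iterate budgets downwards so each countermeasure is used at most once
        for b in range(budget, cost - 1, -1):
            candidate = best[b - cost][0] + reduction
            if candidate > best[b][0]:
                best[b] = (candidate, best[b - cost][1] + [name])
    return best[budget]


# Hypothetical options: (name, cost in k€, expected risk reduction in k€/year)
options = [("segmentation", 40, 30.0), ("MFA", 10, 15.0),
           ("EDR", 30, 20.0), ("backup", 20, 18.0)]
print(select_countermeasures(options, budget=60))  # (53.0, ['MFA', 'EDR', 'backup'])
```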

The “Assessment-Problem”: A coherent risk assessment requires an estimate of the expected damage, which is difficult to obtain. Attack probabilities result jointly from a highly dynamic attack landscape and the system’s vulnerabilities; the consequences of cyberattacks include tangible and intangible, direct and indirect, monetary and non-monetary costs. We use Cyber Threat Intelligence (CTI) to monitor and simulate attack frequencies and attack targets. To improve cost assessment, we transfer our research from the areas of supply chain risk management and humanitarian logistics to the area of cyber risk management (Diehlmann et al. 2021; Kaiser et al. 2021b).
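At its simplest, the expected damage combines these ingredients per threat type: attack frequency, probability of success, and monetized cost per incident. The Python sketch below computes an expected annual loss this way. The threat types and figures are hypothetical. The frequencies stand in for what CTI monitoring might supply, and the cost figures stand in for a cost assessment that already folds in indirect and intangible damage.

```python
from typing import Dict, Tuple


def expected_annual_loss(threats: Dict[str, Tuple[float, float, float]]) -> float:
    """Sum over threat types of
    (attacks per year) * P(attack succeeds) * (monetised cost per incident)."""
    return sum(rate * p_success * cost for rate, p_success, cost in threats.values())


# Hypothetical inputs: attack rates as might be estimated from CTI feeds,
# costs combining direct, indirect and monetised intangible damage.
threats = {
    "phishing": (12.0, 0.05, 20_000.0),
    "ransomware": (0.5, 0.2, 500_000.0),
}
print(round(expected_annual_loss(threats)))  # 62000
```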

Game-Theoretic Analysis of Privacy & Security

Interactive assistance plays an increasingly important role in many modern production processes. Since these systems acquire and process information about human workers to assist them in their tasks, designing assistance systems in a privacy-respecting and secure fashion is a major concern. With the Production Lab 4Crypt-demonstrator of Fraunhofer IOSB, we develop and analyze interactive assistance systems that are both transparent and trustworthy. Transparency here means being comprehensible for their users as well as meeting the operators’ data protection duties. Trustworthiness means being privacy-respecting and comprehensibly secure.

The game-theoretical analysis shows that an employer has a systematic incentive to make illegitimate requests to inspect the video recordings, which leads to discrimination, increased stress, and reduced trust among workers. This illustrates the particular relevance of the cryptographic solution. When assistance systems are viewed through the lens of signaling games, it should not be possible to draw conclusions about an individual worker from a failed approval (counterproductive signal). From a game-theoretic perspective, the system is only information-proof if an independently acting works council is responsible for granting consent. In companies without a works council (fewer than five employees), this role could be assumed by an elected shop steward. However, this would not avoid the signaling effect.

The trust level of the workers is of great importance for the working atmosphere and labor productivity. The game-theoretical analysis confirms that the two most important sources of trust are (I) the “integrity of encryption components” and (II) the independence and consensus principle of information release. These requirements are generally not met by established video assistance systems, highlighting that 4Crypt makes a key contribution to increasing security and privacy at the workplace.

Research Area 4 – Security Analyses

Involved PIs: Bernhard Beckert, Jürgen Beyerer (Head), Jörn Müller-Quade

Active Researchers: Pascal Birnstill, Felix Dörre, Florian Lanzinger, Christian Martin, Jeremias Mechler, Paul-Georg Wagner

The theoretical results in the above research areas are applied in the research area Security Analyses. The goal of our research is to not only show the practical validity and value of our results, but also to establish a (general) quantification methodology.

To this end, we are currently performing the following quantitative analyses:

- We performed a cryptographic security proof of the 4Crypt demonstrator in the Universal Composability framework. In order to extend this security proof to a quantitative analysis covering the actual real-world system, we are currently in the process of researching a methodology that extends cryptographic security proofs to attack trees in a systematic way, mapping security mechanisms of the model to security mechanisms of the real world. Using this attack tree, the costs of an adversary to break the security of 4Crypt relative to the considered adversarial model will be determined by quantifying the assumptions security relies on.

Additionally, we are performing a game-theoretic analysis that quantifies the impact of the system, as well as of its technical security measures, on workers interacting with the system.

- For the CoRReCt demonstrator, we are applying the approach developed in Research Area 2 (QuAC) to quantify the security of a critical component.

Research Area 5 – Network Resilience in Healthcare against Cyber-Attack

Involved PI: Emilia Grass

Active Researchers: Abhilasha Bakre, Stephan Helfrich, Aiman Zainab

This research addresses the urgent and growing challenge posed by cyber threats to digitally connected healthcare systems. As healthcare providers increasingly rely on interoperable digital infrastructure, the risk of cyber incidents with severe consequences for patient care, operational continuity, and financial stability has become a pressing concern. This research is developed in close collaboration with Imperial College London, UK Trusts and several hospitals in Baden-Württemberg, combining academic and clinical perspectives to ensure the practical relevance and applicability of its outcomes. We aim to understand how healthcare networks can be made resilient – not only at the level of individual hospitals, but across interconnected systems by integrating analytical modeling, simulation, and stochastic programming.

The project is grounded in the understanding that healthcare is uniquely vulnerable to cyber threats due to its complexity, reliance on outdated technologies, and chronic underinvestment in cybersecurity. The overarching goal is to develop a comprehensive framework that captures the propagation of cyber threats across hospital networks and assesses the cascading effects on clinical services, with a focus on ensuring patient safety. A major innovation of the proposal is the recognition that cyber-attacks can cause indirect harm beyond the initially affected facility, as disruptions force patient redirections and overwhelm other hospitals within the network. Building resilience, therefore, requires a systemic perspective that accounts for network interdependencies and operational dynamics.

This research was advanced through two core methodological contributions. The first is a stochastic optimization model developed specifically for the UK National Health Service (Grass et al., 2024). This two-stage model supports decision-makers in selecting optimal cybersecurity countermeasures under uncertainty. It anticipates future attack scenarios and incorporates the Conditional Value-at-Risk (CVaR) as a risk metric to account for low-probability, high-impact incidents. Numerical results from a realistic NHS Trust case study show that incorporating stochastic modeling yields more robust decisions compared to traditional deterministic approaches. In high-risk scenarios, the optimized strategies reduced the number of rejected patients by 44%, highlighting the model’s relevance to patient-centered cybersecurity planning.
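To show what CVaR adds over an expected value, the Python sketch below computes both for a hypothetical discrete distribution of rejected patients across attack scenarios. It uses the Rockafellar–Uryasev form CVaR = VaR + E[max(L − VaR, 0)]/(1 − α). The scenarios and numbers are illustrative. The sketch only evaluates one fixed plan, whereas the two-stage model described above optimizes countermeasure decisions with CVaR in the objective.

```python
from typing import List


def cvar(losses: List[float], probs: List[float], alpha: float) -> float:
    """Conditional Value-at-Risk at level alpha for a discrete loss distribution,
    using CVaR = VaR + E[max(L - VaR, 0)] / (1 - alpha) (Rockafellar-Uryasev)."""
    pairs = sorted(zip(losses, probs))
    # VaR: smallest loss whose cumulative probability reaches alpha
    cumulative, var = 0.0, pairs[-1][0]
    for loss, p in pairs:
        cumulative += p
        if cumulative >= alpha - 1e-12:
            var = loss
            break
    tail_excess = sum(p * max(loss - var, 0.0) for loss, p in pairs)
    return var + tail_excess / (1 - alpha)


# Hypothetical scenarios for one countermeasure plan: rejected patients per scenario.
losses = [0.0, 10.0, 100.0]
probs = [0.90, 0.08, 0.02]
print(sum(l * p for l, p in zip(losses, probs)))  # expected loss, about 2.8
print(cvar(losses, probs, alpha=0.95))            # mean of the worst 5%, about 46
```

The gap between the two numbers is why a CVaR-based objective favors plans that protect against rare, high-impact scenarios.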

Complementing this, the second strand of the research applied discrete event simulation to assess operational disruptions in the event of cyber-attacks, focusing on medical devices and emergency department workflows (Angler et al., 2024). The study modeled a hospital’s emergency department and evaluated how technology partnerships could improve cybersecurity readiness and recovery. By simulating different damage scenarios, the study quantified the financial and non-financial impacts of cyber incidents, including lost patient revenue, increased length of stay, and staff overload. When technology partnerships were integrated into the model, recovery times improved by 25%, and estimated cost savings ranged from €245,579 to €315,768 over a 21-day period. These findings suggest that outsourcing certain cybersecurity functions to trusted partners with specialized expertise can be a viable strategy for hospitals with limited in-house capacity.

The conceptual foundation for these models is supported by the development of the Essentials of Cybersecurity in Healthcare Organizations (ECHO) framework, presented in the Delphi consensus study (O’Brien et al., 2021). This study engaged 42 international experts to identify core components of a globally relevant cybersecurity readiness framework for healthcare providers. The resulting ECHO framework consists of 51 components organized across six categories, emphasizing governance, infrastructure, and organizational preparedness. It reflects the healthcare sector’s specific requirements and serves as a practical planning tool, particularly for institutions with limited resources or in lower-income settings.

In sum, this research contributes a novel, evidence-based approach to understanding and enhancing cyber resilience in healthcare. By combining risk-based optimization, process simulation, and expert-informed frameworks, it demonstrates that a network-aware strategy is essential to mitigating the impact of cyber threats. The findings emphasize that resilience must go beyond prevention to include robust recovery planning, capacity coordination, and cross-institutional collaboration. In doing so, the project provides actionable insights for healthcare providers, policymakers, and technology partners working to safeguard patient care in an increasingly digital and threat-prone environment.

| Name | E-Mail | Function |
|---|---|---|
| Müller-Quade, Jörn | joern mueller-quade ∂does-not-exist.kit edu | Spokesperson |
| Mechler, Jeremias | jeremias mechler ∂does-not-exist.kit edu | Research Group Leader |